A Spanish town has gained international attention after several young schoolgirls reported receiving fake nude images of themselves created using an easily accessible “undressing app” powered by artificial intelligence. The incident has sparked a larger conversation about the potential harm caused by these tools. “Today a smartphone can be considered as a weapon,” said Jose Ramon Paredes Parra, the father of a 14-year-old victim. “A weapon with a real potential of destruction and I don’t want it to happen again.”

Spanish National Police have identified over 30 victims between the ages of 12 and 14, and an investigation has been ongoing since September 18. While most of the victims are from Almendralejo, a town in the southwest of Spain, authorities have also found victims in other parts of the country. The perpetrators, who knew most of the victims, used photos taken from their social media profiles and uploaded them to a nudify app, according to police.

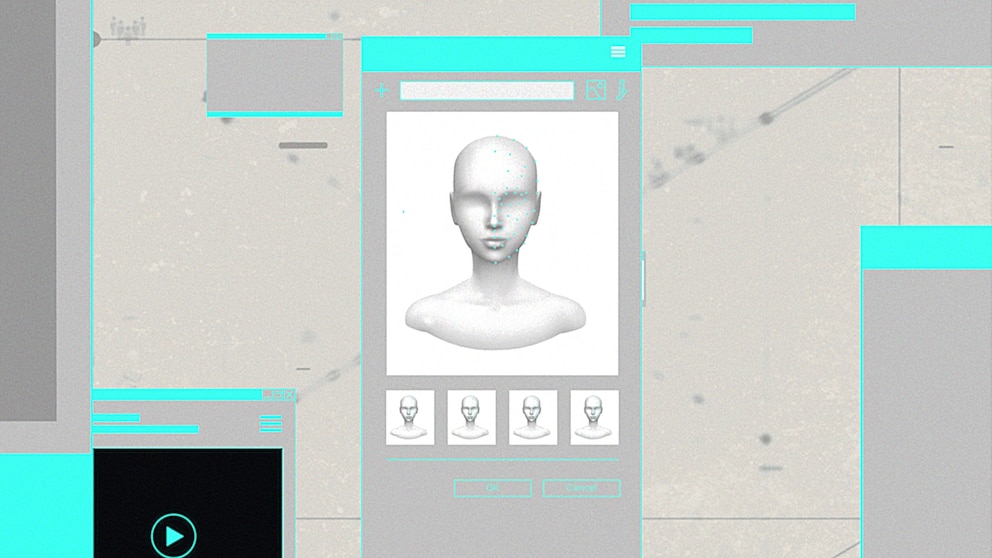

The app in question is an AI-powered tool designed to remove clothing from a subject in a photo. It can be accessed through Telegram or by downloading an app on your phone. The perpetrators, who are also minors, created group chats on WhatsApp and Telegram to share these non-consensual fabricated nude images. The fake images were then used to extort at least one victim on Instagram for real nude images or money.

Telegram claims to actively moderate harmful content on their platform, including the distribution of child sexual abuse material. They remove content that violates their terms of service based on proactive monitoring and user reports. WhatsApp stated that they would treat this situation the same as any kind of child sexual abuse material and would ban those involved while reporting them to the National Center for Missing & Exploited Children.

Professor Clare McGlynn, an expert on violence against women and girls, described the incident as a direct abuse of women and girls by technology specifically designed to exploit them. The team behind the app responded to ABC News, stating that their intention was to make people laugh and show that nudity should not be a source of shame. They claimed to have protections in place for photos of people under 18, blocking users from uploading such photos after two attempts.

Experts warn that it only takes a photo, an email address, and a few dollars to create hyper-realistic non-consensual deepfakes. ABC News reviewed the nudify app and found that it offered both a free service through Telegram and a premium paid service. Payment methods such as Visa, Mastercard, and PayPal were initially listed but were removed after ABC News reached out to the app’s team.

Parra and his wife, Dr. Miriam Al Adib Mendiri, reported the incident to local police after their daughter confided in them. Mendiri used her social media following to denounce the crime publicly, leading to more victims coming forward. Some of the perpetrators are under 14 years old and will be tried under minor criminal law. Investigations are ongoing.

Experts like McGlynn argue that the focus should be on how search platforms rank non-consensual deepfake imagery and the apps that facilitate its creation. They believe that search platforms should de-rank apps that nudify people without their consent. Dan Purcell, founder of Ceartas, agrees, stating that apps designed to unclothe unsuspecting women have no place in society or search engines.

Google responded by saying that it actively designs its ranking systems to avoid shocking people with harmful or explicit content. It has protections in place to help people impacted by involuntary fake pornography and allows individuals to request the removal of pages containing such content. Websites containing non-consensual deepfake imagery are also easy to find through Microsoft’s Bing search engine. Microsoft prohibits the distribution of non-consensual intimate imagery on its platforms and services.

The implications of this new technology are far-reaching. AI-powered mobile apps capable of creating ‘virtual nudes’ of young girls have recently become a cause of grave concern for Spanish police.

The new mobile app, which has caught the attention of authorities, generates realistic 3D images of people that do not exist. It is suspected that the app is being used to create lifelike images of young girls, which can be used for malicious purposes. According to police sources, the app exploits the advances in technology, especially artificial intelligence, with the intention of creating images that appear to be naked minors.

The app works by using pictures of a face, and then manipulating them by applying several techniques available in the market. One of the most commonly used techniques is to detect skin tone, body position, facial features, and hair style in order to generate an image. It is highly concerning, as the final image can be precise and very lifelike.

The police fear that the app may be used to fuel the unlawful trade in child pornography and lead to grooming or abuse of minors. As technology proliferates, children face many dangers that they may not be aware of, and this app is one of them. Parents and guardians should be aware of the risks that this app may pose to their children and take measures to protect them from such exploitation.

Moreover, appropriate measures should be taken to curb the spread of such apps and of any platforms that permit their use. Strict legislation on the matter is necessary, and it is also essential to increase public awareness in order to protect those at risk. It is hoped that the Spanish police will take the necessary steps to protect minors from the dangers of this technology.